The Next-Generation Modelling System reaches a new milestone

May 2021 – GungHo celebrates its 10-year anniversary by starting to run its first weather and climate simulations

Introduction

This year, the GungHo project celebrated its 10th birthday. This was the project to redesign the Met Office’s dynamical core (the numerical algorithm at the heart of the atmospheric model – see Unified Model) for the next generation of supercomputers. GungHo was funded jointly by the Met Office, the Natural Environment Research Council (NERC) and the Science and Technology Facilities Council (STFC) and brought researchers and software engineers from across the UK together to design and implement the new dynamical core. In this article we reflect on the journey of the last 10 years and describe some recent developments.

Background

A fundamental way to improve the accuracy of weather and climate simulations is to refine the grid that is used by the computer to describe the atmosphere. However, refining the grid will require the computer model to perform more calculations, which means the simulation will take longer unless a more powerful computer is available. A large factor in the progress in weather forecasts over recent decades has been the amazing improvements in computer technology and the ability of our models to make efficient use of this power.

Over the next decade, the biggest super-computers are anticipated to reach the exascale – where they can perform over 10^18 calculations per second. To keep pace with these developments, it is vital for the Met Office’s model to be able to exploit the capability of such computers to deliver ever more accurate weather forecasts and climate simulations.

One of the key parts of a weather or climate model is the dynamical core - the numerical algorithm that solves the equations governing fluid motion. The Met Office’s current dynamical core is known as ENDGame, and it describes the Earth using a latitude-longitude grid (see Figure 1). It has been known since the late 2000s that this grid will cause problems on future super-computers, which will rely on spreading the calculations involved in the simulation over ever-increasing numbers of computer processors. The challenge with a latitude-longitude grid is that the grid points cluster together as the North and South poles are approached, and the processors performing the calculations need to frequently communicate with one another. The rate of communication needed between these processors ends up causing a bottleneck for the whole model, meaning that using more processors gives barely any increase in speed.
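The clustering of points near the poles can be illustrated with a little arithmetic. The sketch below is not taken from ENDGame or the UM; it assumes a perfectly spherical Earth of mean radius 6371 km and a hypothetical 1-degree longitude spacing, and the function names are invented for this illustration. It computes the east-west distance between neighbouring grid points along a circle of latitude.

```python
import math

EARTH_RADIUS_KM = 6371.0  # assumed mean Earth radius (spherical approximation)


def east_west_spacing_km(lat_deg, dlon_deg):
    """Distance between neighbouring points along a circle of latitude."""
    # A circle of latitude has radius R * cos(latitude), so a fixed step in
    # longitude covers a shorter distance the closer it is to a pole.
    return EARTH_RADIUS_KM * math.cos(math.radians(lat_deg)) * math.radians(dlon_deg)


def spacing_table(dlon_deg=1.0, latitudes=(0.0, 45.0, 60.0, 80.0, 89.0)):
    """Map each latitude (degrees) to its east-west spacing (km)."""
    return {lat: east_west_spacing_km(lat, dlon_deg) for lat in latitudes}


if __name__ == "__main__":
    for lat, dx in spacing_table().items():
        print(f"latitude {lat:5.1f} deg: east-west spacing {dx:7.2f} km")
```

With these assumptions the spacing is roughly 111 km at the equator, half of that at 60 degrees, and under 2 km at 89 degrees, even though every row of the grid holds the same number of points.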

Figure 1: (Left) a latitude-longitude grid of the sphere, like that currently used in ENDGame and the UM. The points converge at the poles, causing a scalability bottleneck on parallel super-computers. (Right) an example of the cubed-sphere grid that is used by LFRic and GungHo. Note the more uniform distribution of points.
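To put a rough number on "more uniform", the sketch below measures cell-edge lengths on one face of a cubed sphere. It assumes an equiangular gnomonic mapping, which is one common way of constructing such a grid and is not necessarily the construction used by LFRic; the function names are hypothetical.

```python
import math


def face_point(alpha, beta):
    """Map equiangular face coordinates (radians in [-pi/4, pi/4]) to the unit sphere."""
    x, y = math.tan(alpha), math.tan(beta)
    norm = math.sqrt(1.0 + x * x + y * y)
    return (1.0 / norm, x / norm, y / norm)


def arc_length(p, q):
    """Great-circle distance between two unit vectors."""
    dot = sum(a * b for a, b in zip(p, q))
    return math.acos(max(-1.0, min(1.0, dot)))


def spacing_ratio(n=24):
    """Ratio of the longest to the shortest cell edge on one face of n x n cells."""
    step = (math.pi / 2.0) / n
    coords = [-math.pi / 4.0 + i * step for i in range(n + 1)]
    edges = []
    for beta in coords:
        for i in range(n):
            p = face_point(coords[i], beta)
            q = face_point(coords[i + 1], beta)
            edges.append(arc_length(p, q))
    # Edges running in the other direction have the same lengths by symmetry.
    return max(edges) / min(edges)


if __name__ == "__main__":
    print(f"longest / shortest edge on one face: {spacing_ratio():.3f}")
```

Under this assumed mapping the ratio stays at about 1.4 however fine the face is made, whereas on a latitude-longitude grid the east-west spacing shrinks towards zero at the poles, so the equivalent ratio grows without bound as the grid is refined.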

GungHo and LFRic

The GungHo project was born in 2011 to plan a new dynamical core to exploit the next generation of super-computers. The initial focus was to investigate how to move away from the latitude-longitude mesh of the sphere. One major challenge was that many of the strengths of ENDGame came from the longitude and latitude lines – these are orthogonal to one another, facilitating a finite-difference discretisation scheme with many desirable properties. Other meshes of the sphere might not be orthogonal, so a new discretisation would be necessary to retain those desirable properties [1].

By 2014 development was focused on a dynamical core using a cubed-sphere grid (see Figure 1), on which a mixed finite-element formulation had been shown to retain those same properties [2,3]. Named after the project, this new dynamical core is also called GungHo. However, the infrastructure for the Unified Model (UM), the current forecasting system, was fundamentally based around a latitude-longitude grid using a finite-difference scheme. The decision was therefore taken to design and build a new infrastructure that would: a) work in tandem with GungHo; b) be more flexible in terms of what grid it can use; and c) be more flexible to changes in future super-computer architectures. This new infrastructure is called LFRic, named after Lewis Fry Richardson, an early pioneer of numerical weather forecasting. The development of LFRic involves close collaboration with STFC.

As an operational dynamical core contains a large number of complex parts, it is vital to break down development into smaller and simpler steps. One helpful way to do this is to try to solve simpler equations than those used in operational weather forecasts. Much of the early development of GungHo used the shallow-water equations, which have fewer variables and only simulate horizontal fluid motion.
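For reference, one standard textbook form of the rotating shallow-water equations is given below. This is a general statement of the equations, not necessarily the exact formulation used in GungHo development. Here u is the horizontal velocity, h the fluid depth, b the height of the bottom topography, f the Coriolis parameter, g the gravitational acceleration and k the unit vector in the vertical.

```latex
\begin{aligned}
\frac{\partial \mathbf{u}}{\partial t}
  + (\mathbf{u}\cdot\nabla)\,\mathbf{u}
  + f\,\hat{\mathbf{k}}\times\mathbf{u}
  + g\,\nabla (h + b) &= 0, \\
\frac{\partial h}{\partial t} + \nabla\cdot(h\,\mathbf{u}) &= 0.
\end{aligned}
```

There are only two prognostic fields, and the gradient and divergence act in the horizontal only, which is what makes these equations a convenient first test of a new horizontal discretisation.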

Similarly, when it was time to progress to the full set of equations used in weather and climate predictions, development was first carried out in flat Cartesian geometry, avoiding the complications of the curved geometry of the sphere. These early steps in Cartesian geometry are described by Melvin et al. (2019) [4]. Work moved on to spherical domains once there was confidence in the results in these Cartesian domains; it’s important to walk before you can run!

One particularly key step in the evolution of LFRic was the inclusion of PSyclone. This is a tool developed by STFC, and underpins the novel code structure used in LFRic. The concept motivating this is the “separation of concerns”, in which the code describing the science is separated from the code that is used to optimise the performance on a parallel supercomputer, which can be auto-generated by PSyclone [5]. PSyclone was first used with LFRic in 2016. For more details on the design of GungHo and LFRic, see this previous research news article: GungHo and LFRic.
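The idea of separating concerns can be illustrated in miniature. The sketch below is neither PSyclone nor LFRic code, and every name in it is hypothetical. It only shows the general pattern: a "kernel" holds the science for a single cell and knows nothing about parallelism, while interchangeable driver routines decide how that kernel is applied across the whole field.

```python
from concurrent.futures import ThreadPoolExecutor


def relax_kernel(value, target, rate):
    """Science code: nudge one cell's value toward a target value."""
    return value + rate * (target - value)


def apply_serial(kernel, field, *args):
    """One possible schedule: a plain loop over all cells."""
    return [kernel(v, *args) for v in field]


def apply_threaded(kernel, field, *args, workers=4):
    """Another schedule: the same kernel, with cells spread over a thread pool."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map returns results in the same order as the input cells.
        return list(pool.map(lambda v: kernel(v, *args), field))


if __name__ == "__main__":
    field = [0.0, 10.0, 20.0]
    print(apply_serial(relax_kernel, field, 10.0, 0.5))
    print(apply_threaded(relax_kernel, field, 10.0, 0.5))
```

Both drivers give the same answer, so the way the work is scheduled can change without touching `relax_kernel`. In this toy example the drivers are written by hand; in the approach described above, the performance-related layer is the part that can be generated automatically.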

Evolution of the Next Generation Modelling Systems

As work on GungHo and LFRic progressed, it became clear that many other parts of the Met Office’s modelling systems might also need major changes to adapt to the next generation of super-computers. This is particularly true of the parts of the modelling system that will interact with LFRic, such as data assimilation or the ocean models. This led to the launch of the Next Generation Modelling Systems Programme, which encompasses many of the changes anticipated by the Met Office over the next decade and which has become one of the Met Office’s corporate strategic actions and a theme in its Research and Innovation Strategy. The programme’s aim is to reformulate and redesign our complete weather and climate research and operational/production systems to allow the Met Office and its partners to fully exploit future generations of supercomputers for the benefit of society. It covers the atmosphere, land, marine and Earth system modelling capabilities and ranges from observation processing and assimilation through the modelling components to verification and visualisation.

Recent developments

Over the last couple of years, work has continued apace. A significant step was the coupling of the dynamical core to the physics parametrisations, those parts of the atmosphere model that are responsible for representing the effects of processes that cannot be resolved on the grid. As with the development of the dynamical core itself, this started with simpler steps, such as the coupling of moisture processes like evaporation and condensation. The first major aim was then to simulate the atmosphere of an “aqua-planet”, which is a planet with no land or hills, just covered by a “flat”, still ocean. The milestone of stably simulating 1000 days of such an atmosphere was achieved in mid-2020.

Simulating the atmosphere of the real Earth is made considerably more complicated by the presence of the land. Introducing this means that the bottom surface of the atmosphere model is no longer smooth, as it follows the shape of hills and mountains. Another important step was to increase the “lid” of the model from 40 km to the operational value of 80 km. This is so that it better captures important stratospheric processes that impact our weather and climate.

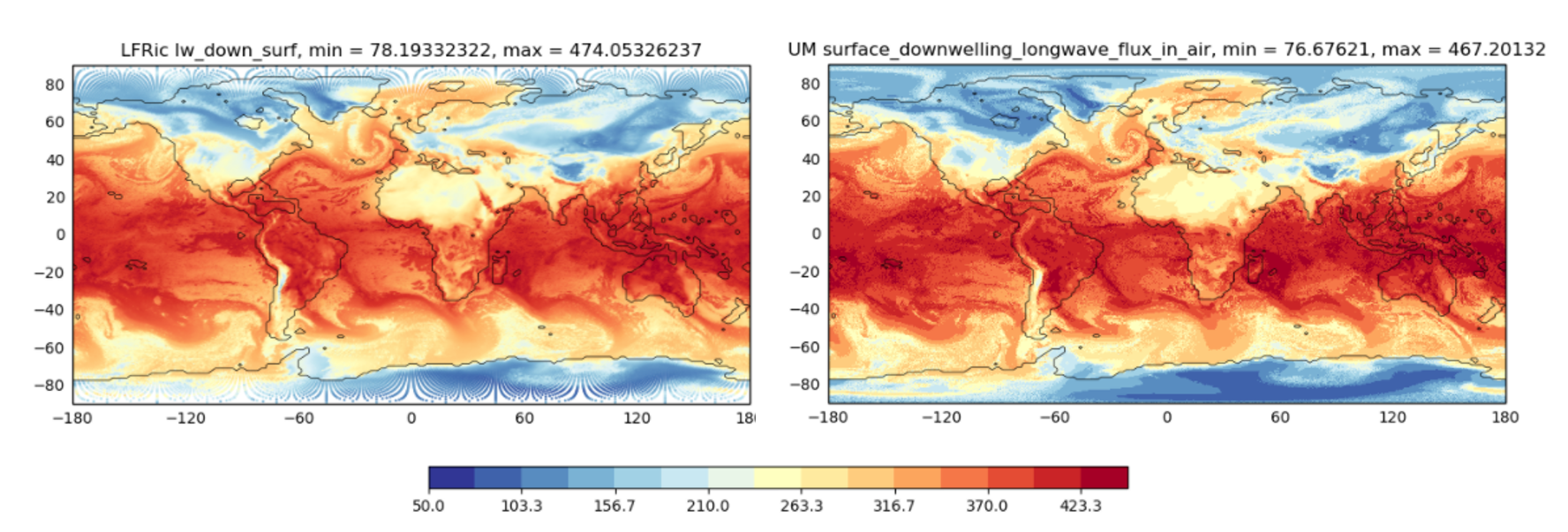

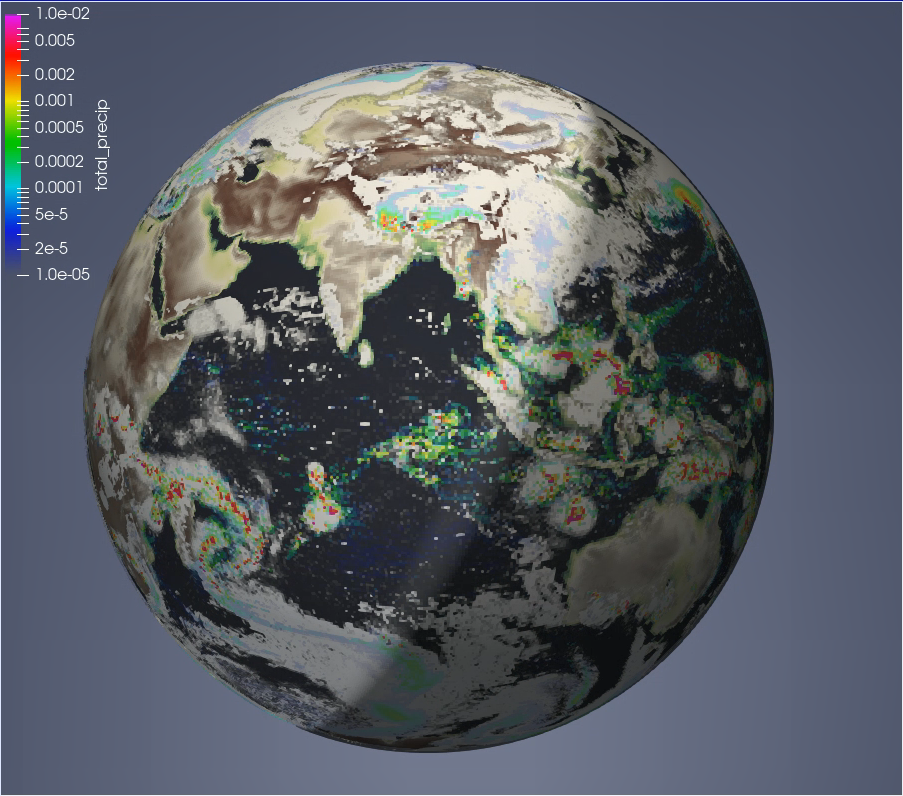

With these developments, in the first months of 2021 GungHo was able to run both its first 5-day global weather forecast and its first 1-year climate simulation. The results have been very encouraging and look comparable to those from the UM. They are illustrated in Figures 2 and 3.

Figure 2: Comparisons taken from day 3 of a 5-day global weather forecast of the downwelling long-wave radiative flux at the Earth’s surface. The left image is LFRic, which compares well to the UM output shown on the right.

Figure 3: A graphic of LFRic combining clouds, surface precipitation and radiation. The visualisation system used for these plots was made available by NIWA.

Future plans

So far development with the physics parametrisations has focused on global models. One of the next key stages is to be able to run regional weather forecasts on a limited area model and to include more complex physical parametrisations. Further down the line, GungHo and LFRic will be integrated with the data assimilation systems and coupled to ocean and chemistry models.

There is still a long path to tread before GungHo and LFRic finally go operational, provisionally planned for 2026-2027, as the next generation of supercomputers becomes available. But as GungHo celebrates its 10th anniversary with the milestones of a 5-day global weather forecast and 1-year climate simulation, the prospect of an operational GungHo and LFRic starts to feel more real.

References

[1] Staniforth, A. and Thuburn, J., 2012. Horizontal grids for global weather and climate prediction models: a review. Quarterly Journal of the Royal Meteorological Society, 138(662), pp.1-26.

[2] Cotter, C.J. and Shipton, J., 2012. Mixed finite elements for numerical weather prediction. Journal of Computational Physics, 231(21), pp.7076-7091.

[3] Thuburn, J. and Cotter, C.J., 2012. A framework for mimetic discretization of the rotating shallow-water equations on arbitrary polygonal grids. SIAM Journal on Scientific Computing, 34(3), pp.B203-B225.

[4] Melvin, T., Benacchio, T., Shipway, B., Wood, N., Thuburn, J. and Cotter, C., 2019. A mixed finite‐element, finite‐volume, semi‐implicit discretization for atmospheric dynamics: Cartesian geometry. Quarterly Journal of the Royal Meteorological Society, 145(724), pp.2835-2853.

[5] Adams, S.V., Ford, R.W., Hambley, M., Hobson, J.M., Kavčič, I., Maynard, C.M., Melvin, T., Müller, E.H., Mullerworth, S., Porter, A.R., Rezny, M., Shipway, B.J. and Wong, R., 2019. LFRic: Meeting the challenges of scalability and performance portability in Weather and Climate models. Journal of Parallel and Distributed Computing, 132, pp.383-396.